June 25, 2024 | The Hibernia, San Francisco, CA

Jam-Packed Agenda

- Morning Session

- Autonomous Systems & Robotics

- Foundational/LLMs/GenAI

- Lightning Talks

- Intimate/Virtual Track

Morning Session

-

8:30AM

Registration Opens

-

9:00AM-9:30AM

Coffee & Light Breakfast

-

9:30AM-10:00AM

Opening Keynote

Welcome to the AI Quality Conference

-

Mohamed Elgendy

CEO/Co-Founder, Kolena

-

Demetrios Brinkmann

Co-Founder, MLOps Community

-

-

10:00AM - 10:30AM

NEW QUALITY STANDARDS FOR AUTONOMOUS DRIVING

Fireside chat featuring Mo Elshenawy, President and CTO of Cruise Automation, and Mohamed Elgendy, CEO and Co-founder of Kolena. In this discussion, Mo Elshenawy will delve into the comprehensive AI philosophy that drives Cruise Automation. He will share unique insights into how Cruise is developing its quality standards from the ground up, with a particular focus on defining and achieving “perfect” driving. This fireside chat offers valuable perspectives on the rigorous processes involved in teaching autonomous vehicles to navigate with precision and safety.

-

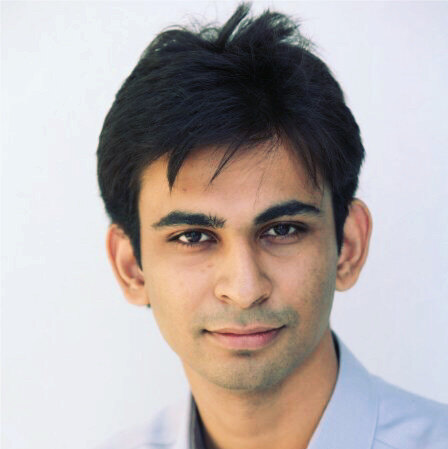

Mo Elshenawy

President & CTO, Cruise

-

Mohamed Elgendy

CEO & Co-Founder, Kolena

-

-

10:30am - 11:00am

The dollars and cents behind the AI VC boom

Natasha will moderate a panel of leading VCs who have backed the top AI companies. They will discuss the correction within the boom, the flight to quality, what happens when OpenAI eats your lunch, how founders should think about giving big tech a spot on their cap tables, and how to invest at the speed of innovation right now.

-

Natasha Mascarenhas

Reporter, The Information

-

Oana Olteanu

Partner, SignalFire

-

James Cham

VC Investor, Bloomberg Beta

-

Eric Carlborg

Founding Partner, Lobby Capital

-

-

11:00 am - 11:30 am

Hunting for Quality Signals: using lightweight functions to measure data quality and identify generative AI failure modes

Kolena CPO and Co-Founder Gordon Hart will discuss how best to leverage AI to measure quality and find failures in unstructured datasets without ground truths or reliable metrics.

-

Gordon Hart

CPO & Co-Founder, Kolena

-

-

11:30am-12:00pm

TO RAG OR NOT TO RAG?

Retrieval-Augmented Generation (RAG) is a powerful technique to reduce hallucinations from Large Language Models (LLMs) in GenAI applications. However, large context windows (e.g. 1M tokens for Gemini 1.5 Pro) can be a potential alternative to the RAG approach. This talk contrasts both approaches and highlights when a large context window is a better option than RAG, and vice versa.

-

Amr Awadallah

CEO, Co-Founder, Vectara

-

-

12:00pm - 12:30 pm

EIGHTY-THOUSAND POUND ROBOTS: AI DEVELOPMENT & DEPLOYMENT AT KODIAK SPEED

Kodiak is on a mission to automate the driving of every commercial vehicle in the world. Today, Kodiak operates a nationwide autonomous trucking network 24x7x365, on the highway, in the dirt, and everywhere in between. We also release and deploy software about 30 times per day across this fleet that is not just mission critical, but also safety critical. Our AI development process must match this criticality and speed, providing fast engineering iteration while guaranteeing the high level of quality that safety requires. In this talk, we’ll share the details of that process, from how the system is architected, trained, and evaluated, to the validation CI/CD pipeline, which is the lifeblood of the development flywheel. We’ll talk about how we collect cases, how we iterate models, and how we do quality assurance, data, and release management - all in a way that seamlessly keeps our robots truckin’ across the US.

-

Collin Otis

Director of Autonomy, Kodiak Robotics

-

Autonomous Systems & Robotics

-

12:50 pm- 1:20 pm

Overcoming Bias in Computer Vision and Voice Recognition

This panel will explore the critical issue of bias in AI models, focusing on voice recognition and computer vision technologies, as well as the data used to power models in these domains. Discussions will cover how bias is detected, measured, and mitigated to ensure fairness and inclusivity in AI systems. Panelists will be invited to share best practices, case studies, and strategies for creating unbiased AI applications.

-

Skip Everling

Head of DevRel, Kolena

-

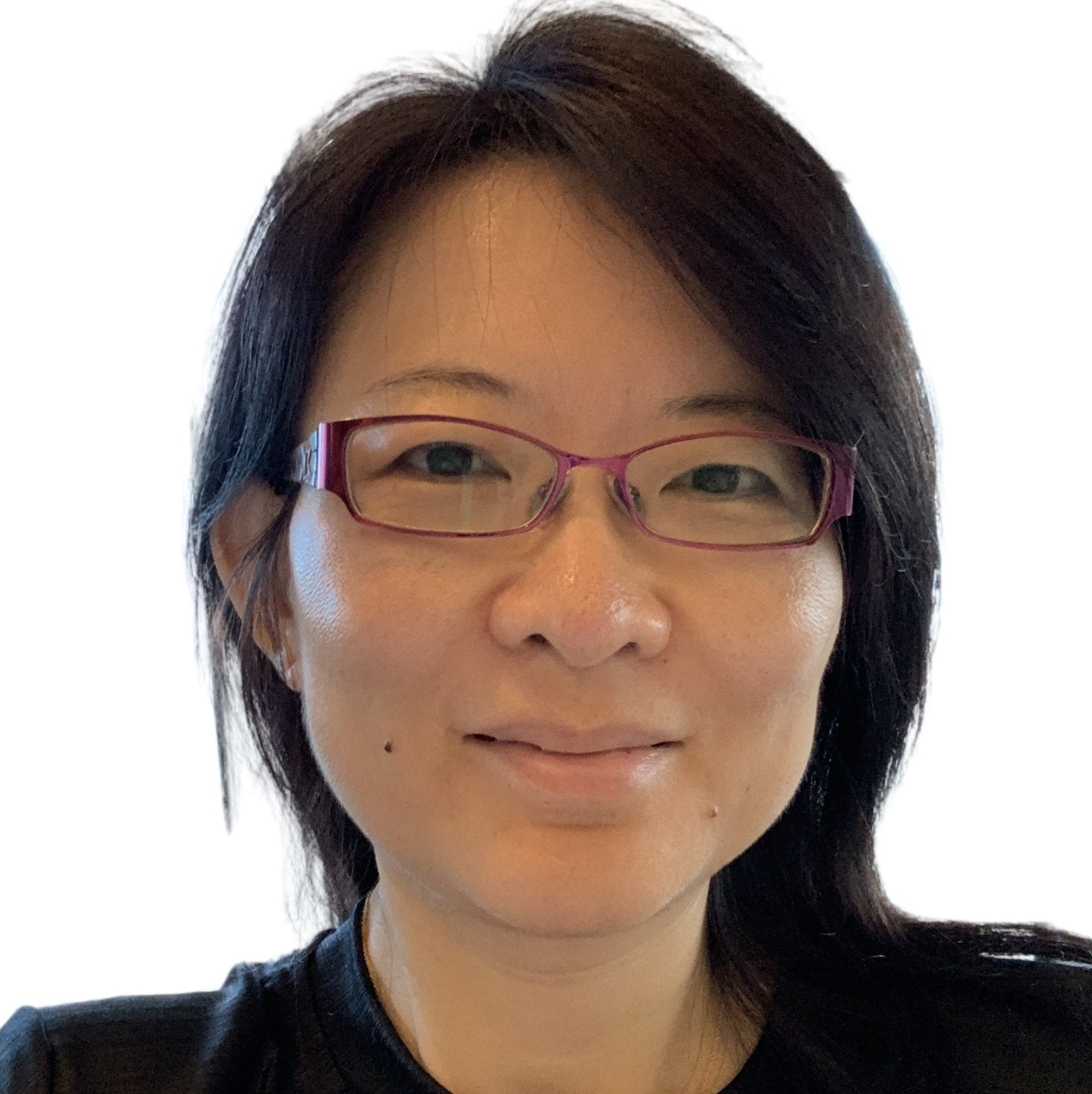

Rajpreet Thethy

Staff TPM, Data, AssemblyAI

-

Doug Aley

CEO, Paravision

-

Peter Kant

CEO, Enabled Intelligence

-

-

1:25pm - 1:50 pm

Self Improving RAG

Higher quality retrieval isn't just about more complex retrieval techniques. Using user feedback to improve model results is a tried and true technique from the ancient days of *checks notes* recommender systems. And if you know something about the patterns in your data and user queries, even synthetic data can produce fine-tuned models that significantly improve retrieval quality.

-

Chang She

CEO / Co-founder, LanceDB

-

-

1:55 PM - 2:25 PM

Generating The Invisible: Capturing and Generating Edge-cases in Autonomous Driving

Evaluating autonomous vehicle (AV) stacks in simulation typically involves replaying driving logs from real-world recorded traffic. However, agents replayed from offline data do not react to the actions of the AV and see only the data they have recorded, and their behavior cannot be easily controlled to simulate counterfactual scenarios. Existing approaches have attempted to address these shortcomings by proposing methods that rely on heuristics or learned generative models of real-world data, but these approaches either lack realism or necessitate costly iterative sampling procedures to control the generated behaviors. In this talk I will break down how learning scene graphs can enable photo-realistic scene reconstructions and how we can leverage return-conditioned offline reinforcement learning within a physics-enhanced simulator to efficiently generate reactive and controllable traffic agents. Specifically, we process real-world driving data through the scene graph representation and the simulator to generate a diverse offline reinforcement learning dataset, annotated with various reward terms. With this dataset, we train a return-conditioned multi-agent behavior model that allows for fine-grained manipulation of agent behaviors by modifying the desired returns. This approach also allows for learned synthetic data based on these trajectories.

-

Felix Heide

Head of Artificial Intelligence, Torc Robotics

-

-

2:30pm - 2:50pm

FIRESIDE CHAT: VISION AND STRATEGIES FOR ATTRACTING & DRIVING AI TALENTS IN HIGH GROWTH

- Attracting and retaining top AI talent is essential for staying competitive. This panel will explore how to craft and communicate a compelling vision that aligns with the organization's evolving needs, inspiring potential hires and keeping current employees motivated.

- The discussion will offer actionable strategies for sourcing top talent, adapting to changing needs, and maintaining company alignment. Attendees will learn best practices for attracting AI professionals, creating an attractive employer brand, and enhancing talent acquisition and retention strategies.

- Lastly, the panel will cover structuring and organizing the AI team as it grows to ensure alignment with business goals. This includes optimal team configurations, leadership roles, and processes that support collaboration and innovation, enabling sustained growth and success.

-

Shailvi Wakhlu

Founder, Shailvi Ventures LLC

-

Olga Beregovaya

VP, AI, Smartling

-

Ashley Antonides

Associate Research Director, AI/ML, Two Six Technologies

-

2:55 pm -3:20 pm

PANEL: DATA QUALITY = QUALITY AI

Data is the foundation of AI. To ensure AI performs as expected, high-quality data is essential. In this panel discussion, Chad, Maria, Joe, and Pushkar will explore strategies for obtaining and maintaining high-quality data, as well as common pitfalls to avoid when using data for AI models.

-

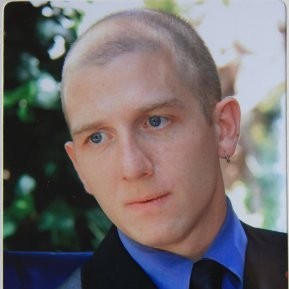

Sam Partee

CTO, Co-Founder, Arcade AI

-

Chad Sanderson

CEO & Co-Founder, Gable

-

Joe Reis

CEO, Author, "Recovering Data Scientist", Nerd Herd Education

-

Maria Zhang

CEO & Co-founder, Proactive AI Lab Inc

-

Pushkar Garg

Staff Machine Learning Engineer, Clari Inc.

-

-

3:30 pm-3:55 pm

Growing Reliable ML/AI Systems Through Freedom and Responsibility

ML/AI systems are some of the most complex machines ever built by humankind. These systems are never built as perfectly planned cathedrals. Instead, they evolve incrementally over time, carefully balancing the need to experiment freely with the responsibilities affecting real-world production systems. As developers of open-source Metaflow, we have been walking this tightrope for years. In this talk, we will share our recent observations from the field and provide ideas for how reliable ML/AI systems can be built over the coming years.

-

Savin Goyal

Co-founder & CTO, Outerbounds

-

-

4:00 PM - 4:30 PM

Balancing speed and safety

The need for moving to production quickly is paramount in staying out of perpetual POC territory. AI is moving fast. Shipping features fast to stay ahead of the competition is commonplace.

Quick iterations are viewed as a strength in the startup ecosystem, especially when taking on a deeply entrenched competitor. Each week a new method to improve your AI system becomes popular or a SOTA foundation model is released.

How do we balance the need for speed against the responsibility of safety, so that we have the confidence to ship a cutting-edge model or AI architecture and know it will perform as tasked?

What are the risks and safety metrics that others are using when they deploy their AI systems? How can you correctly identify when risks are too large?

-

Remy Thellier

Head of Growth & Strategic Partnerships, Vectice

-

Erica Greene

Director of Engineering, Machine Learning, Yahoo

-

Shreya Rajpal

CEO, Guardrails

-

Claire Vo

Chief Product Officer, LaunchDarkly

-

-

4:40 pm- 5:05 pm

Open Model and its curation over Kubernetes

Open Generative AI (GenAI) models are transforming the AI landscape. But which one is right for your project? What quality metrics should you use to evaluate your own trained model? For application developers and AI practitioners enhancing their applications with GenAI, it’s critical to choose and evaluate the model that meets both quality and performance requirements.

This talk will examine customer scenarios and discuss the model selection process. We will explore the current landscape of open models and collection mechanisms to measure model quality. We will share insights from Google’s experience. Join us to learn about model metrics and how to measure them.

-

Cindy Xing

Software Engineering Manager, Google

-

Foundational Models, LLMs, and GenAI

-

12:50PM - 1:20PM

A BLUEPRINT FOR SCALABLE & RELIABLE ENTERPRISE AI/ML SYSTEMS

Enterprise AI leaders continue to explore the best productivity solutions that solve business problems, mitigate risks and increase efficiency. Building reliable and secure AI/ML systems requires following industry standards, an operating framework, and best practices that can accelerate and streamline the scalable architecture that can produce expected business outcomes.

This session, featuring veteran practitioners, focuses on building scalable, reliable, and high-quality AI and ML systems for enterprises.

-

Hira Dangol

VP AI/ML & Automation, Bank of America

-

Rama Akkiraju

VP Enterprise AI/ML, NVIDIA

-

Nitin Aggarwal

Sr. Director, Generative AI, Microsoft

-

Steven Eliuk

VP AI & Governance, IBM

-

-

1:25pm - 1:50pm

The new AI Stack with Foundation models

How has the ML engineering stack changed with foundation models? While the generative AI landscape is still rapidly evolving, some patterns have emerged. This talk discusses these patterns. Spoilers: the principles of deploying ML models into production remain the same, but we’re seeing many new challenges and new approaches. This talk is the result of my survey of 900+ open source AI repos and discussions with many ML platform teams, both big and small.

-

Chip Huyen

VP of AI & OSS, Voltron Data

-

-

2:00 pm- 2:25 pm

FIRESIDE CHAT: CALIFORNIA AND THE FIGHT OVER AI REGULATION

-

Scott Wiener

California State Senator

-

Gerrit De Vynck

Tech reporter, The Washington Post

-

-

2:25 pm- 2:50 pm

EVALUATION OF ML SYSTEMS IN THE REAL WORLD

Evaluation seeks to assess the quality, reliability, latency, cost, and generalizability of ML systems, given assumptions about operating conditions in the real world. That is easier said than done! This talk presents some of the common pitfalls that ML practitioners ought to avoid and makes the case for tying model evaluation to business objectives.

-

Mohamed El-Geish

CTO & Co-Founder, Monta AI

-

-

2:55PM - 3:25PM

Evaluating evaluations

Since our first LLM product a year and a half ago, Notion's AI team has learned a lot about evaluating LLM-based systems through the full life cycle of a feature, from ideation and prototyping to production and iteration, from single-shot text completion models to agents. In this talk, rather than focus on the nitty-gritty details of specific evaluation metrics or tools, I'll share the biggest, most transferable lessons we learned about evaluating frontier AI products, and the role eval plays in Notion's AI team, on our journey to serving tens of billions of tokens weekly today.

-

Linus Lee

Research Engineer, Notion

-

-

3:30PM - 3:55PM

Do Re Mi for Training Metrics: Start at the Beginning

Model quality/performance is the only true end-to-end metric of model training performance and correctness. But it is usually far too slow to be useful from a production point of view. It tells us what happened with training hours or days ago, when we have already suffered some kind of a problem. To improve the reliability of training we will want to look for short-term proxies for the kinds of problems we experience in large model training.

This talk will identify some of the common failures that happen during model training and some faster/cheaper metrics that can serve as reasonable proxies of those failures.

-

Todd Underwood

Research Platform Reliability Lead, OpenAI

-

-

4:00PM - 4:25PM

Integrating LLMs into products

Learn about best practices when integrating Large Language Models (LLMs) into product development. We will discuss the strengths of modern LLMs like Claude and how they can be leveraged to enable and enhance various applications. The presentation will cover simple prompting strategies and design patterns that facilitate the effective incorporation of LLMs into products.

-

Emmanuel Ameisen

Research Engineer, Anthropic

-

-

4:30PM - 4:55PM

FROM PREDICTIVE TO GENERATIVE: UBER'S JOURNEY

Today, Machine Learning (ML) plays a key role in Uber’s business, being used to make business-critical decisions like ETA, rider-driver matching, Eats homefeed ranking, and fraud detection. As Uber’s centralized ML platform, Michelangelo has been instrumental in driving Uber’s ML evolution since it was first introduced in 2016. It offers a set of comprehensive features that cover the end-to-end ML lifecycle, empowering Uber’s ML practitioners to develop and productize high-quality ML applications at scale.

-

Kai Wang

Lead PM, AI Platform, Uber

-

Raajay Viswanathan

Software Engineer, Uber

-

Lightning Talks

-

12:20 pm

Mitigating Hallucinations and Inappropriate Responses in RAG Applications

In this talk, we’ll introduce the concept of guardrails and discuss how to mitigate hallucinations and inappropriate responses in customer-facing RAG applications, before they are displayed to your users.

While prompt engineering is great, as you add more guidelines to your prompt (“do not mention competitors”, “do not give financial advice”, etc.), your prompt gets longer and more complex, and the LLM’s ability to follow all instructions accurately rapidly degrades. If you care about reliability, prompt engineering is not enough.

-

Alon Gubkin

CTO & Co-Founder, Aporia

-

-

12:40 pm

BUILDING ADVANCED QUESTION-ANSWERING AGENTS OVER COMPLEX DATA

Large Language Models (LLMs) are revolutionizing how users can search for, interact with, and generate new content, leading to a huge wave of developer-led, context-augmented LLM applications. Some recent stacks and toolkits around Retrieval-Augmented Generation (RAG) have emerged, enabling developers to build applications such as chatbots using LLMs on their private data.

However, while setting up basic RAG-powered QA is straightforward, solving complex question-answering over large quantities of complex data requires new data, retrieval, and LLM architectures. This talk provides an overview of these agentic systems, the opportunities they unlock, how to build them, as well as remaining challenges.

-

Jerry Liu

CEO, LlamaIndex

-

-

1:00 pm

The Power of Small Language Models: Compact Designs for Big Impact

Small language models (SLMs) are taking the industry by storm. But why would you use them? In this talk, we will uncover the secrets behind crafting compact language models that pack a powerful punch. Despite their reduced size, these models are capable of achieving remarkable performance across a wide range of tasks, especially with RAG (Retrieval-Augmented Generation). We will delve into the innovative techniques and architectures that enable us to compress and optimize language models without sacrificing their effectiveness.

-

Joshua Alphonse

Director of Developer Relations, PremAI

-

-

1:20 pm

Building Safer AI: Balancing Data Privacy with Innovation

The balance between AI innovation and data security and privacy is a major challenge for ML practitioners today. In this talk, I’ll discuss policy and ethical considerations that matter for those of us building ML and AI solutions, in particular around data security, and describe ways to make sure your work doesn’t create unnecessary risks for your organization. It is possible to create incredible advances in AI without risking breaches of sensitive data or damaging customer confidence, by using planning and thoughtful development strategies.

-

Stephanie Kirmer

Senior Machine Learning Engineer, DataGrail

-

-

1:40 pm

How to take control of your RAG results

What is the state of the art in RAG quality evaluation? How much attention should you pay to embedding model benchmarks? How do you establish and evaluate objectives for your information retrieval system before and after you launch?

The journey to Quality AI starts with measurement. The second, third, and 100th steps are then iteration and improvement against that measurement. What can we learn from the search & relevance industry that has been around for decades? What challenges are specific to embedding-powered retrieval? And how do you actually improve your vector search?

Let's talk about it!

-

Daniel Svonava

CEO, Superlinked

-

-

2:00 PM

AIOps, MLOps, DevOps, Ops: Enduring Principles and Practices

It may be hard to believe, but AI apps powered by big Transformers are not actually the first complex system that engineers have dared to try to tame. In this talk, I will review one thread in the history of these attempts, the "ops" movements, beginning in mid-20th-century Japanese factories and passing through Lean startups and the leftward shift of deployment to the recent past of MLOps and the present future of LLMOps/AIOps. I will map these principles, from genchi genbutsu and poka-yoke to observability and monitoring, onto emerging practices in the operationalization of and quality management for AI systems.

-

Charles Frye

AI Engineer, Modal Labs

-

-

2:35 pm

GraphRAG: Enriching RAG conversations with knowledge graphs

By integrating LLM-based entity extraction into unstructured data workflows, knowledge graphs can be created which illustrate the named entities and relationships in your content.

Kirk will discuss how knowledge graphs can be leveraged in RAG pipelines to provide greater context for the LLM response, as well as utilizing entity extraction for better content filtering.

Using GraphRAG, developers can build enriched user experiences - pulling data from a wide variety of sources, not just what is accessible with standard RAG vector search retrieval.

-

Kirk Marple

Founder, CEO, Graphlit

-

-

2:55 pm

Evaluating LLM Tool use: A Survey

LLMs are becoming more integrated with the traditional software stack through the use of structured outputs. Combining structured outputs with planning & reasoning gives rise to tool use/function calling.

The LLM is in charge of deciding when to use which tool, generating a correct call signature and parsing the unstructured/structured output generated by tool invocation.

How do we know if an LLM is doing a good job? How can we compare various LLMs for our specific tool-use cases on metrics like speed, cost & accuracy?

In this talk I'll go into evaluation strategies that are being used in practice and how we think about evaluation of tool use at Groq.

-

Rick Lamers

AI Engineer & Researcher, Groq Inc.

-

-

3:20 pm

Learning from our past mistakes, or how to containerize the AI pipeline

Over the past ten years, containers have completely transformed how we ship cloud-native applications. By containerizing our apps, we have decoupled them from the chaos of a fragmented and fast-moving ecosystem. Tools and platforms come and go, but the container standard remains, making us all more productive. So why haven’t we done the same for our AI pipelines? Why can’t they, too, be decoupled from the chaos? What will it take to get rid of the sentence: “it works on my machine”? What is holding us back? Solomon will tell the origin story of containerization; draw parallels between the early days of Cloud computing and today’s AI gold rush; and show how to avoid repeating the mistakes of the past. Who knows, there may even be a demo!

-

Solomon Hykes

Cofounder & CEO, Dagger.io

-

-

3:35 PM

Beyond Benchmarks: Measuring Success for Your AI Initiatives

Join us as we move beyond benchmarks and explore a more nuanced take on model evaluation and its role in the process of specializing models. We'll discuss how to ensure that your AI model development aligns with your business objectives and results, while also avoiding common pitfalls that arise when training and deploying. We'll share tips on how to design tests and define quality metrics, and provide insights into the various tools available for evaluating your model at different stages in the development process.

-

Salma Mayorquin

CEO, Remyx AI

-

-

3:55 pm

Calibrating the Mosaic Evaluation Gauntlet

A good benchmark is one that clearly shows which models are better and which are worse. The Databricks Mosaic Research team is dedicated to finding great measurement tools that allow researchers to evaluate experiments. The Mosaic Evaluation Gauntlet is our set of benchmarks for evaluating the quality of models and is composed of 39 publicly available benchmarks split across 6 core competencies: language understanding, reading comprehension, symbolic problem solving, world knowledge, commonsense, and programming. In order to prioritize the metrics that are most useful for research tasks across model scales, we tested the benchmarks using a series of increasingly advanced models.

-

Theresa Barton

Research Scientist, Databricks

-

-

4:15 pm

Building Robust and Trustworthy Gen AI Products: A Playbook

A practitioner's take on how you can consistently build robust, performant, and trustworthy Gen AI products, at scale. The talk will touch on different parts of the Gen AI product development cycle covering the must-haves, the gotchas, and insights from existing products in the market.

-

Faizaan Charania

Senior Product Manager, ML, LinkedIn

-

-

4:30 pm

Unlocking Trust: Enhancing AI Quality for Content Understanding in Visual Data

In this presentation, we'll unpack how multimodal AI enhances content understanding of visual data, which is pivotal for ensuring quality and trust in digital communities. Through our work to date, we find that striking a balance between model sophistication and transparency is key to fostering trust, alongside the ability to swiftly adapt to evolving user behaviors and moderation standards. We'll discuss the overall importance of AI quality in this context, focusing on interpretability and feedback mechanisms needed to achieve these goals. More broadly, we'll also highlight how AI quality forms the foundation for the transformative impact of multimodal AI in creating safer digital environments.

-

Florent Blachot

VP of Data Science & Engineering, Fandom

-

William A. Gaviria Rojas

Co-Founder, Coactive

-

Intimate/Virtual Track

-

12:00 pm

THE ONLY REAL MOAT FOR GENERATIVE AI: TRUSTED DATA

Your leadership team is bullish about gen AI — great! So how do you get started? As open source AI models become more commoditized, the AI pecking order will quickly favor those companies that can lean into their first-party proprietary data the most effectively. And too often, teams rush headfirst into RAG, fine-tuning and LLM production while ignoring their biggest “moat”: the reliability of the data itself. Barr Moses, Co-Founder and CEO of Monte Carlo, will explain how data trust can be baked into your team’s gen AI strategy from day one with the right infrastructure, team structure, SLAs and KPIs, and more to successfully drive value and get your AI pipelines off the ground.

-

Barr Moses

CEO and Co-founder, Monte Carlo

-

-

12:30 pm

A Systematic Approach to Improve Your AI Powered Applications

LLM-powered applications unlock capabilities previously unattainable. While they may seem magical, LLMs are essentially black boxes controlled by a few hyperparameters, which can lead to unreliability, such as hallucinations and undesirable responses. To maximize the benefits of LLMs and deliver high-quality user experiences, it's essential to implement a system for regular monitoring, measurement, and evaluation. In this talk, I will present a straightforward approach for developing a minimal system that helps application developers continuously monitor and evaluate the performance of their applications.

-

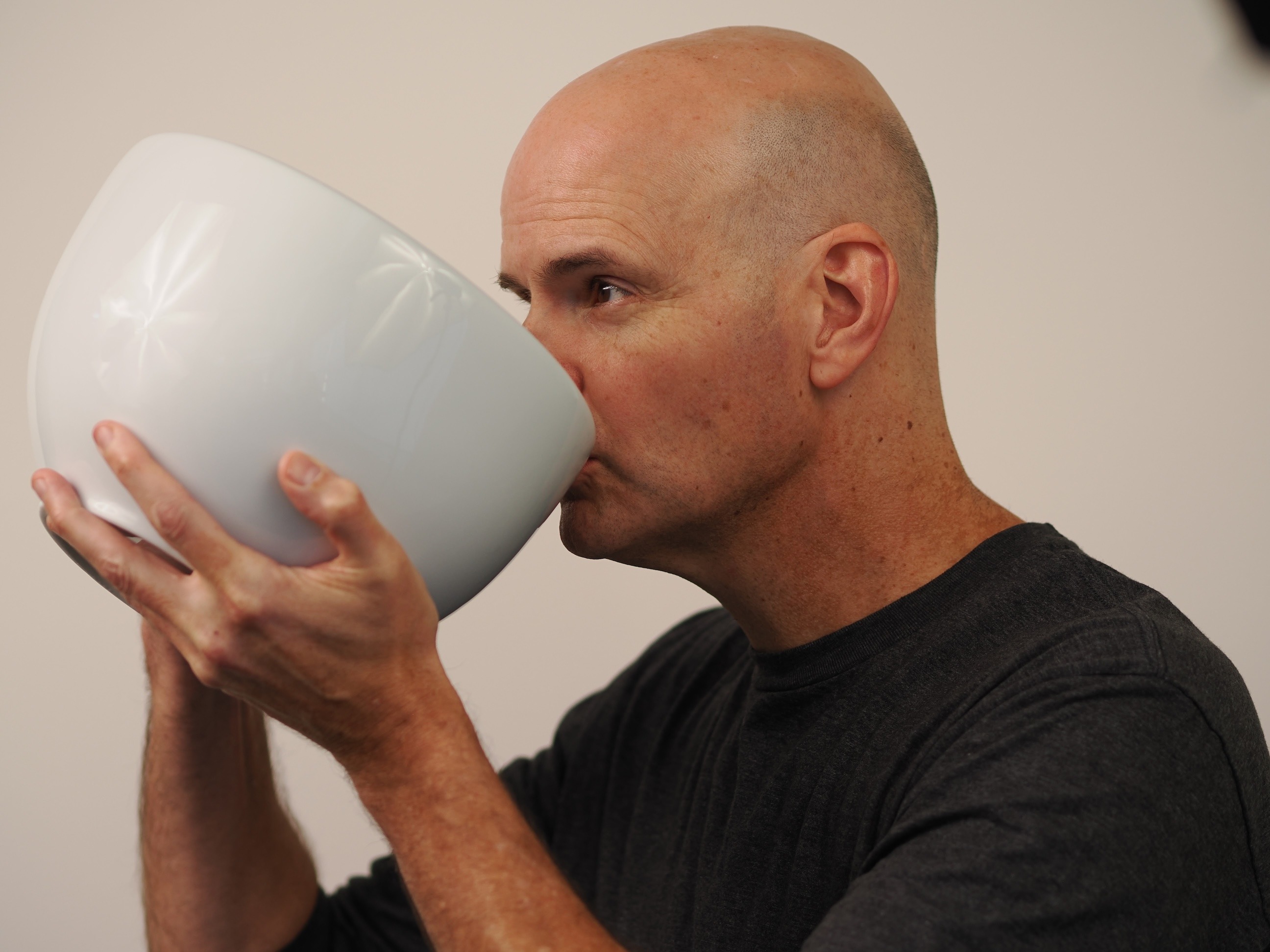

Karthik Kalyanaraman

Co-founder and CTO, Langtrace AI - A Scale3 Labs Product

-

-

12:45 pm

AI-Driven Code Generation and Website Builders

In the coming days, designers, marketers, and business folks will just need one prompt. AI will do the rest: crafting interfaces, backend, all needed integrations… At the same time, the no-code market already has too many unrelated tools. So, what's next? How do we deal with this?

-

Patryk Pijanowski

CEO & Co-founder, Codejet

-

-

1:00 pm

Building a Product Optimization Loop for Your LLM Features

A collaborative, self-reinforcing product optimization loop for your LLM features that empowers you to ship with confidence.

If that sounds less believable than full AGI at this point, we hear you. Freeplay aims to change that by building a platform to enable this exact kind of optimization cycle: a platform that facilitates collaboration between Product, Eng and Ops so a whole team can monitor, experiment, test, and deploy their LLM products in a repeatable and reliable way.

All while equipping the team with what they need to build expertise around LLM development and learn how to move your metrics in the right direction.

-

Jeremy Silva

AI Engineer, Freeplay

-

-

1:15 pm

Why You Cannot Ignore Foundational Data Systems When Building Reliable Multimodal AI Solutions

Other than vector databases, data systems have largely remained the same for the past few decades, and are now being repurposed to serve a fundamentally different workload, namely multimodal AI. Even if we ignore the technical debt and inefficiencies, which is not advisable, these data solutions do not provide easy ways to visualize and inspect data, track its provenance, or keep it consistent, even though this data now serves as a foundation for increasingly critical AI use cases. With years of experience studying various multimodal data types across various industries, and building a database to cater to these requirements, we have learnt some best practices and how to avoid pitfalls. These lessons are the premise of this talk.

-

Vishakha Gupta

Cofounder/CEO, ApertureData

-

-

1:30 pm

Less is not more: How to serve more models efficiently

While building content generation platforms for filmmakers and marketers, we learnt that professional creatives need personalized on-brand AI tools. However, running generative AI models at scale is incredibly expensive and most models suffer from throughput and latency constraints that have negative downstream effects on product experiences. Right now we are building infrastructure to help organizations developing generative AI assets train and serve models more cheaply and efficiently than was possible before, starting with visual systems.

-

Julia Turc

Co-CEO, Storia AI

-

-

1:45 pm

Simulation-driven development for AI applications

Testing for GenAI applications has required us to rethink the way we measure performance. With the RewardModel leaderboard and other evaluator model improvements, the community is beginning to find a more stable basis for measuring performance. The roadblock now is: where can we trust our evaluators to tell us the truth? Simulations are an old concept from manufacturing & autonomous driving that might hold the final key to unlocking comprehensive testing for AI. In this talk, I will discuss how simulation-driven design can supercharge AI app reliability. We'll also discuss some practical examples of how you can use simulations to 10x your iteration speed and application reliability today.

-

Dhruv Singh

Co-founder & CTO, HoneyHive AI

-

-

2:00 pm

Smart Talent Acquisition in the Silicon Savannah

Africa's rapidly evolving tech ecosystem is driving innovation in workforce management. Pariti, a pioneering job board for African startups, leverages a cutting-edge LLM-based recommendation system to vet candidates from diverse backgrounds and locations. How do we normalize CVs that range from 1 page to 27 pages? How can we assist a startup in South Africa in recruiting talent as they expand to Nigeria? How do we identify senior candidates for technologies that have only been available for a few years?

From selecting a training dataset that accurately represents the workforce of the Silicon Savannah to developing a recruitment recommendation system, we will discuss the foundational concepts behind building an AI vetting system that prioritizes quality, representativeness, and feasibility.

-

Chiara Stramaccioni

Data Scientist, Pariti

-

-

2:15 pm

Transforming Evaluation from Intuition to Precision

In the evolving landscape of AI, moving beyond intuitive "vibe checks" to precise evaluation is critical for advancing the reliability and performance of AI systems. In this talk, "Transforming Evaluation from Intuition to Precision," we explore the current shortcomings of standardized benchmarks and the impracticality of human evaluations for AI applications generating free-form text. We'll discuss the urgent need for automated, task-specific evaluation methods and envision a future where rigorous, data-driven metrics ensure AI systems not only perform well but also inspire trust and widespread adoption.

-

Aubrey Kayla

CTO, RAGmetrics.ai

-

-

2:30 pm

Full RAG: Driving Quality with Hyperpersonalization

Achieving high-quality, AI-powered personalization with traditional RAG (Retrieval-Augmented Generation) alone is challenging, especially when it comes to incorporating real-time and user-specific context. Derek Salama, Principal Product Manager at Tecton, has a better way: In this talk, he introduces "Full RAG", an evolution of the traditional RAG architecture that leverages real-time and user-specific data to deliver high-quality, hyper-personalized AI responses.

By enriching prompts with real-time user data and contextual signals, Full RAG enables AI models to generate tailored recommendations with Large Language Models (LLMs) that adapt to users' evolving preferences and behaviors. Using a hypothetical travel application as an example, Derek will demonstrate how this new architecture can help your team build products with personalized experiences like never before.

To make building these experiences as simple as possible, Derek will introduce Tecton's feature platform. He'll demonstrate how Tecton makes it easy to bring batch, streaming, and real-time data to LLMs in production, enabling your team to deliver hyper-personalized recommendations in customer-facing AI applications.

-

Derek Salama

Principal Product Manager, Tecton

-

-

3:00 PM

RAG Evaluation using Ragas

Retrieval Augmented Generation (RAG) enhances chatbots by incorporating custom data in the prompt. Using large language models (LLMs) as judges has gained prominence in modern RAG systems. This talk will demo Ragas, an open-source automation tool for RAG evaluations. I'll discuss and demo evaluating a RAG pipeline using Milvus and RAG metrics like context F1-score and answer correctness.

-

Christy Bergman

Developer Advocate, Zilliz

-

-

3:15 pm

Effective workflows for building production-ready LLM apps

Going from an idea to an LLM application that is actually production-ready (i.e., high-quality, trustworthy, cost-effective) is difficult and time-consuming. In particular, LLM applications require iterative development driven by experimentation and evaluation, as well as navigating a large design space (with respect to model selection, prompting, retrieval augmentation, fine-tuning, and more). The only way to build a high-quality LLM application is to iterate and experiment your way to success, powered by data and rigorous evaluation; it is essential to then also observe and understand live usage to detect issues and fuel further improvement. In this talk, we cover the prototype-evaluate-improve-observe workflow that we’ve found to work well, and actionable insights as to how to apply this workflow in practice.

-

Ariel Kleiner

CEO and Founder, Inductor

-

-

3:30 pm

Entity Resolved Knowledge Graphs

Knowledge graphs have recently spiked in popularity, for example in _retrieval augmented generation_ (RAG) methods used to mitigate hallucination in LLMs. Graphs emphasize _relationships_ in data, adding _semantics_, more so than with SQL or vector databases. However, data quality issues can degrade linking during KG construction and updating, which makes downstream use cases inaccurate and defeats the point of using a graph. When you have join keys (unique identifiers), building relationships in a graph may be straightforward, although false positives (duplicate nodes) can result from typos or minor differences in attributes like name, address, phone, etc.; family members sharing email; duplicate customer entries; and so on. This talk describes what an _Entity Resolved Knowledge Graph_ is, why it's important, plus patterns for deploying _entity resolution_ (ER) that are proven to work. We'll cover how to make graphs more meaningful in data-centric architectures by repairing connected data:

- Unify connected data from across multiple data sources.

- Consolidate duplicate nodes and reveal hidden connections.

- Create more accurate, intuitive graphs which provide greater downstream utility for AI applications.

-

Paco Nathan

Principal DevRel Engineer, Senzing

-

3:45 pm

Enterprise AI Governance: A Comprehensive Playbook

This talk presents a comprehensive overview of enterprise AI governance, highlighting its importance, key components, and practical implementation stages. As AI systems become increasingly prevalent in business operations, organizations must establish robust governance frameworks to mitigate risks, ensure compliance, and foster responsible AI innovation. I define AI governance and articulate its relationship to AI risks and a common set of emerging regulatory requirements. I then outline a three-stage approach to enterprise AI governance: organization-level governance, intake, and ongoing governance. At each stage, I give examples of actions that support effective oversight and explain how they are operationalized in practice.

-

Ian Eisenberg

Head of AI Governance Research, Credo AI

-

-

4:00 pm

Designing data quality for AI use cases

Data for AI workloads is often stored raw, in data lakes that use open formats. This talk will focus on designing a data quality strategy for these raw formats.

-

Mona Rakibe

Cofounder and CEO, Telmai

-